Audio, Web and Worklets

The Web is the ultimate inner platform. Over the years, it has laboriously assimilated more and more operating system features. Regardless of whether you believe that makes sense, the Web has enormous momentum: its reach and accessibility are compelling, and so here we are, building signal processors in the web browser.

Audio as an Afterthought

My earliest recollection of audio on the web is of General MIDI tunes in an <embed> tag. Back then the Web was not really an app platform: nobody intended such "embedded multimedia objects" to be created or played back under program control.

Gradually the platform got more capable. HTML5 brought the HTMLMediaElement family, including the <audio> tag. There was more programmatic control, and even the possibility of generating source material in Javascript. Real-time procedural audio was not really a design goal, though, and the platform was held back by a slow, garbage-collected language.

asm.js

V8 and friends made rapid strides in the early 2010s, incentivized by the emergence of the Web as an application platform. Javascript JITs made heroic efforts to claw back the numerical performance forfeited by a dynamic scripting language. Around this time, Mozilla pushed asm.js, a subset of Javascript that works on linear memory and is more amenable to compiler optimization. It turned out such a subset was a viable compile target for traditional native languages.

Web Audio

Coinciding with the Web platform moving beyond hypermedia, a new audio API better suited to procedural audio, the Web Audio API, was introduced in 2011. Like many audio stacks in modern operating systems, it was fashioned as a graph of nodes. Each browser would implement a set of audio processing primitives, like oscillators, envelopes and filters, and web apps could build and manipulate graphs of those primitives on the fly.

But what would one do when none of the built-in nodes was quite what was needed?

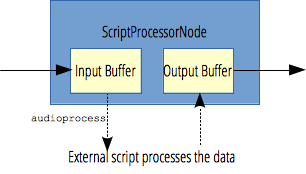

ScriptProcessorNode

ScriptProcessorNode was the final piece that allowed user-space generation of procedural audio on the web platform. Javascript finally got its mitts on raw sample data in a comfortable callback.

The figure by Mozilla Contributors is licensed under CC-BY-SA 2.5.

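To make "raw sample data in a comfortable callback" concrete, here is a minimal sketch of the sort of work such a callback does: filling each block with a sine wave while carrying the phase over to the next block, so that consecutive blocks join without clicks. `SineOscillator` is a hypothetical name, not part of Web Audio. In a browser it would be driven from the node's `onaudioprocess` handler by passing it `event.outputBuffer.getChannelData(0)`; that wiring is only shown as a comment so the sketch runs outside a browser.

```typescript
// Hypothetical helper: a sine oscillator that remembers its phase between
// calls, so blocks rendered in separate callbacks join without clicks.
class SineOscillator {
  private phase = 0; // position within the current cycle, in [0, 1)
  private readonly increment: number; // cycles advanced per sample

  constructor(frequency: number, sampleRate: number) {
    this.increment = frequency / sampleRate;
  }

  // Overwrite `out` with the next out.length samples.
  fill(out: Float32Array): void {
    for (let i = 0; i < out.length; i++) {
      out[i] = Math.sin(2 * Math.PI * this.phase);
      this.phase += this.increment;
      if (this.phase >= 1) this.phase -= 1; // wrap to keep precision over long runs
    }
  }
}

// Browser wiring, for reference only:
// const osc = new SineOscillator(440, context.sampleRate);
// node.onaudioprocess = (e) => osc.fill(e.outputBuffer.getChannelData(0));
```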

However, this is not the end of our story. ScriptProcessorNode is flawed beyond redemption, and on the path to deprecation. To understand why it could never work, you need to consider the Javascript threading model. Or the fact that historically, there wasn't any.

Even if you carefully crafted high-performing Javascript that never triggered garbage collection, ScriptProcessorNode would still fail, because its callback runs in the same context and event loop as the rest of your web application. Simply put, the lower bound for reliable latency was the duration of the longest synchronous task anywhere else in your application, because no audio processing could happen while that task was running.

Consider graphics: maybe you were able to religiously maintain 60 frames per second by capping anything else at 16 milliseconds, which is not easy on the Web platform. For audio, that is an okay-ish but not great latency. In addition, any time you spend processing audio is subtracted from your frame budget. Prepare for the occasional major garbage collection or document reflow. In graphics, you drop a frame or two. For audio, it's snap crackle pop time.
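The arithmetic behind that comparison can be sketched as follows. This is a back-of-the-envelope model under a simplifying assumption: the audio buffer only has to cover the longest main-thread stall, ignoring any extra buffering the browser adds on top. The buffer sizes are the power-of-two values ScriptProcessorNode accepts; the function names are hypothetical.

```typescript
// Buffer sizes (in sample frames) that ScriptProcessorNode accepts.
const SCRIPT_PROCESSOR_SIZES = [256, 512, 1024, 2048, 4096, 8192, 16384];

// Time available per frame at a given refresh rate, in milliseconds.
function frameBudgetMs(fps: number): number {
  return 1000 / fps;
}

// Duration of one audio buffer, in milliseconds.
function bufferLatencyMs(frames: number, sampleRate: number): number {
  return (1000 * frames) / sampleRate;
}

// Smallest allowed buffer that can ride out a main-thread stall of
// `stallMs`, or undefined if even the largest buffer is too short.
// Simplified model: the buffer only has to cover the stall itself.
function bufferForStall(stallMs: number, sampleRate: number): number | undefined {
  return SCRIPT_PROCESSOR_SIZES.find(
    (frames) => bufferLatencyMs(frames, sampleRate) >= stallMs,
  );
}
```

Covering a single 60 fps frame budget (about 16.7 ms) at 48 kHz already takes a 1024-frame buffer, roughly 21 ms of latency, and riding out a 100 ms reflow at 44.1 kHz takes 8192 frames, about 186 ms. For comparison, Web Audio renders in 128-frame quanta, about 2.7 ms at 48 kHz.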

WebAssembly

WebAssembly was the next major advance in the quest for performant low-latency work on the Web platform. It takes the idea of asm.js to its logical conclusion. Wasm is a compactly encoded binary instruction format that uses linear memory and typed primitives.

It is the ideal target for audio code on the Web, if only we could somehow avoid running in the main event loop...
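In practice, "linear memory" means that compiled Wasm code and Javascript share one flat byte array, and Javascript reads and writes it through typed views. A minimal sketch, assuming a plain `ArrayBuffer` as a stand-in for the `buffer` of a `WebAssembly.Memory`, and a made-up byte offset where a real module would hand back a pointer; `f32View` is a hypothetical helper:

```typescript
// Stand-in for WebAssembly.Memory#buffer: one flat byte array shared by
// compiled code and Javascript. 65536 bytes is exactly one Wasm page.
const heap = new ArrayBuffer(65536);

// Hypothetical helper: a float32 view of `length` samples at `byteOffset`.
// A Float32Array offset must be a multiple of 4 bytes.
function f32View(buffer: ArrayBuffer, byteOffset: number, length: number): Float32Array {
  if (byteOffset % Float32Array.BYTES_PER_ELEMENT !== 0) {
    throw new RangeError(`unaligned float32 offset ${byteOffset}`);
  }
  return new Float32Array(buffer, byteOffset, length);
}

// Pretend the compiled code told us its output lives at byte offset 1024.
const output = f32View(heap, 1024, 128);
output[0] = 0.5;

// Views alias the same bytes: a second view at that offset sees the write,
// and so does a raw byte view.
const alias = f32View(heap, 1024, 1);
const bytes = new Uint8Array(heap, 1024, 4);
```

One caveat for real modules: when a `WebAssembly.Memory` grows, its old `buffer` is detached and replaced, so views like these should be re-created rather than cached across calls that might grow memory.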

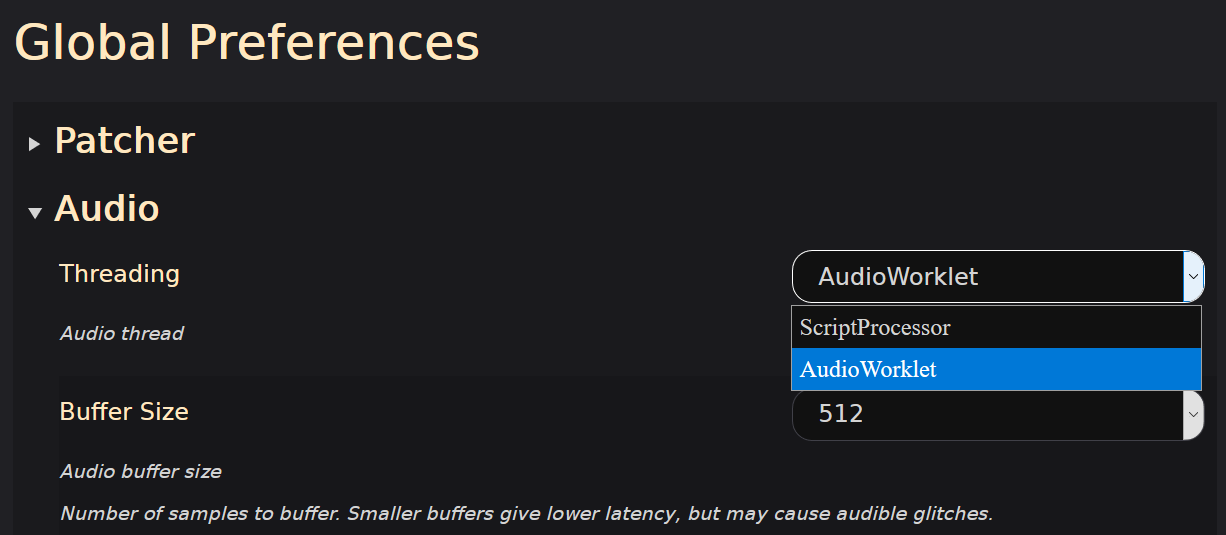

Enter AudioWorklet

AudioWorklet is the missing piece of our puzzle. It adds a dedicated Javascript context that runs on the audio thread, bringing the performance of a custom Wasm audio node up to the level of the native Web Audio nodes. The AudioWorklet is isolated from the main Javascript context; that isolation is what makes it fast, but it also means that all communication must go through message queues.

AudioWorklets were introduced in Chrome in 2017. For quite a while they remained exclusive to Google's browser, but that has just changed: Mozilla has shipped AudioWorklets in Firefox!

This is a great time to introduce support in Veneer; you can try it in the latest snapshot by heading over to Project > Global Preferences and selecting the new audio threading model. Let me know if things break!

Threading in Veneer

Veneer spreads its work across several concurrent threads for better performance. While Kronos is fairly quick at compiling, it is still too slow to call synchronously from the UI, so the native compiler runs in a dedicated Web Worker. Veneer sends code to it via a message queue and receives binary Wasm blobs asynchronously in response. The browser then prepares these for execution on yet another asynchronous path: its work queue for WebAssembly compilation. The resulting executable instance is connected to Web Audio via ScriptProcessorNode.

ScriptProcessor Architecture
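Because more than one compile request may be in flight at once, each reply from the worker has to be matched with its request. A minimal sketch of one way to do that: tag every message with an id and keep a table of pending promises. The `Port` interface, the message shapes and `CompilerClient` are assumptions for illustration, not Veneer's actual protocol; in the browser, a `Worker` can be adapted to the `Port` shape.

```typescript
// Minimal shape of a message endpoint; a Worker or MessagePort can be
// adapted to it in the browser.
interface Port {
  postMessage(message: unknown): void;
  onmessage: ((event: { data: unknown }) => void) | null;
}

// Hypothetical message shapes, for illustration only.
interface CompileRequest { id: number; source: string }
interface CompileReply { id: number; wasm?: Uint8Array; error?: string }

interface Pending {
  resolve: (wasm: Uint8Array) => void;
  reject: (reason: Error) => void;
}

// Hypothetical client for a compiler running in a Web Worker.
class CompilerClient {
  private nextId = 1;
  private readonly pending = new Map<number, Pending>();
  private readonly port: Port;

  constructor(port: Port) {
    this.port = port;
    port.onmessage = (event) => this.onReply(event.data as CompileReply);
  }

  // Queue `source` for compilation; the promise settles when the reply
  // carrying the same id arrives, whatever order replies come back in.
  compile(source: string): Promise<Uint8Array> {
    const id = this.nextId++;
    return new Promise<Uint8Array>((resolve, reject) => {
      this.pending.set(id, { resolve, reject });
      const request: CompileRequest = { id, source };
      this.port.postMessage(request);
    });
  }

  get inFlight(): number {
    return this.pending.size;
  }

  private onReply(reply: CompileReply): void {
    const entry = this.pending.get(reply.id);
    if (!entry) return; // unknown or already settled id: ignore
    this.pending.delete(reply.id);
    if (reply.wasm) entry.resolve(reply.wasm);
    else entry.reject(new Error(reply.error ?? "compile failed"));
  }
}
```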

The situation is slightly more complicated with AudioWorklet, as we introduce yet another Javascript scope. After compilation, the main thread generates a WebAssembly Module. The module contains metadata about IO, which is used in creating an AudioWorkletNode.

AudioWorklet Architecture
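The step from IO metadata to an AudioWorkletNode might look like this. A minimal sketch, assuming a hypothetical metadata shape with at most one input bus and exactly one output bus. The option names (`numberOfInputs`, `numberOfOutputs`, `outputChannelCount`, `channelCount`, `channelCountMode`) are real `AudioWorkletNodeOptions` fields, redeclared locally so the sketch compiles without browser type definitions.

```typescript
// Hypothetical IO metadata for one compiled processor.
interface ProcessorIO {
  inputChannels: number; // 0 for a pure generator
  outputChannels: number;
}

// Local subset of AudioWorkletNodeOptions; field names match Web Audio.
interface WorkletNodeOptions {
  numberOfInputs: number;
  numberOfOutputs: number;
  outputChannelCount: number[];
  channelCount?: number;
  channelCountMode?: "max" | "clamped-max" | "explicit";
}

function nodeOptionsFor(io: ProcessorIO): WorkletNodeOptions {
  if (io.outputChannels < 1) {
    throw new RangeError("this sketch assumes at least one output channel");
  }
  const options: WorkletNodeOptions = {
    numberOfInputs: io.inputChannels > 0 ? 1 : 0,
    numberOfOutputs: 1,
    // One entry per output, fixing each output's channel count up front.
    outputChannelCount: [io.outputChannels],
  };
  if (io.inputChannels > 0) {
    // Mix whatever is connected to exactly the channel count the DSP expects.
    options.channelCount = io.inputChannels;
    options.channelCountMode = "explicit";
  }
  return options;
}
```

In the browser, the result would be passed as the third argument of `new AudioWorkletNode(context, name, options)`, with `name` being whatever the processor was registered under (say, a hypothetical `"kronos-processor"`). Fixing `outputChannelCount` keeps the processor from being handed output buffers with a channel count its compiled code did not expect.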

AudioWorkletNode is the control interface from the main Javascript context to the AudioWorklet. Actual DSP happens in AudioWorkletProcessor, and that is where our WebAssembly must run.

The main thread sends our new WebAssembly Module to the AudioWorklet context over the message queue. The module is instantiated worklet-side and associated with the AudioWorkletProcessor. Audio IO and the link between our AudioWorkletNode and AudioWorkletProcessor are handled by Web Audio behind the scenes.
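Worklet-side, the processor's job boils down to asking the compiled code for a block and copying it into Web Audio's output arrays. A minimal sketch, assuming hypothetical exports: a `render(frames)` function that writes planar float32 output into linear memory and returns its byte offset, plus the module's `memory`. `WasmProcessorSketch` only mirrors the shape of an `AudioWorkletProcessor`; in a real worklet it would extend that class and be registered with `registerProcessor()`, and `attach` would be called once the module sent from the main thread has been instantiated.

```typescript
// Hypothetical exports of an instantiated Kronos module.
interface DspExports {
  memory: { buffer: ArrayBuffer }; // the shape WebAssembly.Memory offers
  // Renders `frames` samples per channel as planar float32 data in linear
  // memory and returns the byte offset of channel 0.
  render(frames: number): number;
}

// Mirrors the shape of an AudioWorkletProcessor. In a worklet it would
// extend AudioWorkletProcessor and be passed to registerProcessor().
class WasmProcessorSketch {
  private dsp: DspExports | null = null;
  private readonly channels: number;

  constructor(channels: number) {
    this.channels = channels;
  }

  // Call once the module sent from the main thread has been instantiated.
  attach(dsp: DspExports): void {
    this.dsp = dsp;
  }

  process(
    _inputs: Float32Array[][],
    outputs: Float32Array[][],
    _parameters: Record<string, Float32Array>,
  ): boolean {
    const out = outputs[0];
    if (!this.dsp) {
      for (const channel of out) channel.fill(0); // silence until the module arrives
      return true;
    }
    const frames = out[0].length; // one render quantum
    const base = this.dsp.render(frames);
    // Fetch the buffer after rendering: growing memory replaces it.
    const heap = this.dsp.memory.buffer;
    const count = Math.min(this.channels, out.length);
    for (let ch = 0; ch < count; ch++) {
      const offset = base + ch * frames * Float32Array.BYTES_PER_ELEMENT;
      out[ch].set(new Float32Array(heap, offset, frames));
    }
    return true; // keep the processor alive
  }
}
```

Reading `memory.buffer` inside `process` rather than caching a view in the constructor is deliberate: if the Wasm heap grows, the old buffer is detached, and a view created over it can no longer be read.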

For interactive control, we send any parameter changes from the main thread to the AudioWorkletProcessor via a message queue, and receive waveform peak data and any readout values in the other direction.
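The worklet end of that two-way traffic might look like this. A minimal sketch, assuming hypothetical message shapes and names (`ToProcessor`, `FromProcessor`, `ControlState`); `post` stands in for `this.port.postMessage` inside an `AudioWorkletProcessor`. Peaks are accumulated and reported once every few blocks rather than once per block, to keep message traffic modest. Only waveform peaks are modelled here, not other readouts.

```typescript
// Hypothetical message formats for each direction of the port.
type ToProcessor = { type: "param"; name: string; value: number };
type FromProcessor = { type: "peak"; peak: number };

// Worklet-side control state. `post` stands in for this.port.postMessage.
class ControlState {
  readonly params = new Map<string, number>();
  private peak = 0;
  private blocks = 0;
  private readonly post: (message: FromProcessor) => void;
  private readonly blocksPerReport: number;

  constructor(post: (message: FromProcessor) => void, blocksPerReport: number) {
    this.post = post;
    this.blocksPerReport = blocksPerReport;
  }

  // Handler for messages arriving from the main thread.
  receive(message: ToProcessor): void {
    if (message.type === "param") this.params.set(message.name, message.value);
  }

  // Call once per rendered block. The running peak is posted every
  // `blocksPerReport` blocks instead of on every render quantum.
  observe(block: Float32Array): void {
    for (let i = 0; i < block.length; i++) {
      this.peak = Math.max(this.peak, Math.abs(block[i]));
    }
    this.blocks++;
    if (this.blocks >= this.blocksPerReport) {
      this.post({ type: "peak", peak: this.peak });
      this.peak = 0;
      this.blocks = 0;
    }
  }
}
```

Inside a processor, this would be wired up as `this.port.onmessage = (e) => state.receive(e.data)`, with `state.observe(outputs[0][0])` called from each `process` call.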

Bare Virtual Metal

There is one remaining twist, and it is not adult entertainment even if the heading made you think so.

Veneer creates and destroys signal processors and instances dynamically. Some of that bookkeeping must be done by hand: Javascript objects are garbage collected, but the linear memory used by WebAssembly is not managed by the collector.

In the case of ScriptProcessorNode we can hitch a ride on the C runtime library: there is already a small WebAssembly image present in the main thread for parsing Kronos expressions. It is created by Emscripten, which graciously provides us with malloc and free for memory management.

No such image is available to the AudioWorklet. To work around that, I wrote a tiny, simple block-splitting memory allocator in Javascript to manage the worklet heap. It is a nice reminder that Kronos processors run just fine without the C runtime library, or even without an operating system, as in the enormously satisfying niche of bare-metal programming. In this case, though, our "bare metal" is a teetering tower of software abstraction called the web browser.
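For a flavour of what such an allocator involves, here is a minimal sketch of a block-splitting, first-fit allocator over a heap region. It is an illustration under assumptions, not Veneer's actual code: it keeps its bookkeeping in a Javascript array rather than in headers inside the Wasm heap, rounds sizes up to a hypothetical 16-byte alignment, and merges freed blocks with free neighbours to limit fragmentation.

```typescript
// One contiguous region of the managed heap, either in use or free.
interface Block { offset: number; size: number; free: boolean }

// Hypothetical first-fit allocator with block splitting and coalescing.
class BlockAllocator {
  // Blocks tile the managed region in address order, without gaps.
  private blocks: Block[];
  private readonly align: number;

  constructor(base: number, size: number, align = 16) {
    this.align = align;
    this.blocks = [{ offset: base, size, free: true }];
  }

  // First fit: take the first free block that is large enough and split off
  // the unused tail as a new free block. Returns a byte offset, or -1.
  alloc(bytes: number): number {
    const size = Math.max(this.align, Math.ceil(bytes / this.align) * this.align);
    for (let i = 0; i < this.blocks.length; i++) {
      const b = this.blocks[i];
      if (!b.free || b.size < size) continue;
      if (b.size > size) {
        this.blocks.splice(i + 1, 0, { offset: b.offset + size, size: b.size - size, free: true });
        b.size = size;
      }
      b.free = false;
      return b.offset;
    }
    return -1;
  }

  // Mark a block free and merge it with free neighbours.
  free(offset: number): void {
    const i = this.blocks.findIndex((b) => b.offset === offset && !b.free);
    if (i < 0) throw new Error(`free of unknown offset ${offset}`);
    this.blocks[i].free = true;
    const next = this.blocks[i + 1];
    if (next && next.free) {
      this.blocks[i].size += next.size;
      this.blocks.splice(i + 1, 1);
    }
    const prev = this.blocks[i - 1];
    if (prev && prev.free) {
      prev.size += this.blocks[i].size;
      this.blocks.splice(i, 1);
    }
  }

  get freeBytes(): number {
    return this.blocks.filter((b) => b.free).reduce((sum, b) => sum + b.size, 0);
  }
}
```

Offsets returned by `alloc` are byte addresses into the worklet's linear memory, so they could be passed to compiled code as pointers.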

Enjoy!

I hope Veneer's new audio capabilities work well for you! Let me know how it turns out.